What is Provisioned Concurrency?

Provisioned Concurrency is a new feature of AWS Lambda, the serverless compute service. What does it do? It reduces the number of cold starts by preparing execution environments before they are needed, up to and including running the initialization code. This can dramatically reduce the latency of API invocations. Provisioned Concurrency is also easy to use: all you need to do is define the capacity (the number of execution environments to keep ready) and decide when you want that capacity available (see Auto Scaling). You can set it up in the AWS Lambda console, AWS CloudFormation, or Terraform.

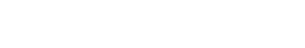

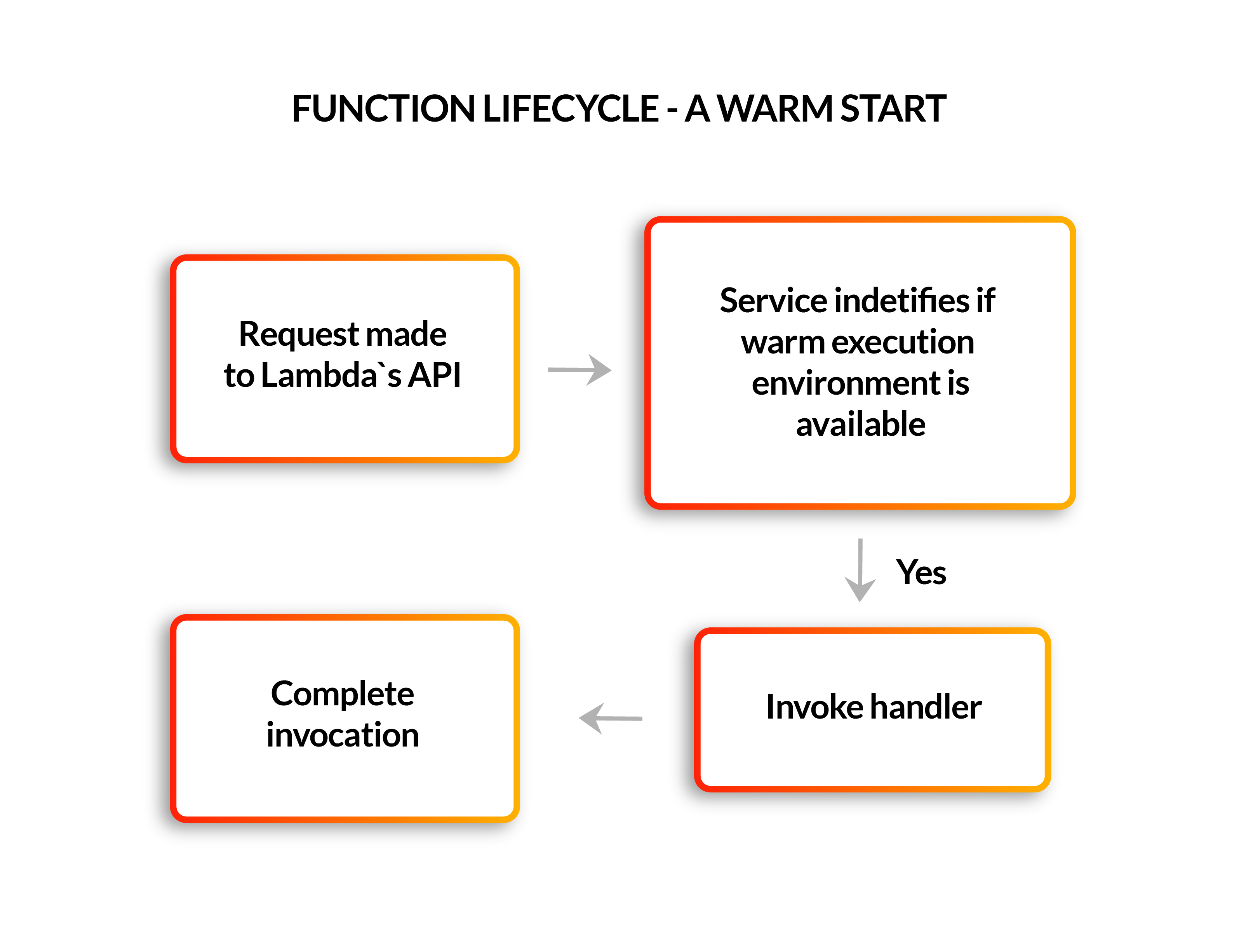
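If you prefer to script the setup, the AWS SDK for Python (boto3) exposes the same setting through the Lambda client's `put_provisioned_concurrency_config` call. The sketch below only builds the request unless you pass in a client; the helper name, the function name `my-function`, and the alias `prod` are hypothetical placeholders. Note that Provisioned Concurrency is configured on a published version or an alias, not on `$LATEST`.

```python
from typing import Any, Optional


def configure_provisioned_concurrency(
    function_name: str,
    qualifier: str,
    executions: int,
    lambda_client: Optional[Any] = None,
) -> dict:
    """Build (and optionally send) a Provisioned Concurrency request.

    qualifier: a published version number or alias name; $LATEST is not allowed.
    lambda_client: a boto3 Lambda client; when None, the request parameters are
    returned without calling AWS.
    """
    if qualifier == "$LATEST":
        raise ValueError("Provisioned Concurrency requires a published version or alias")
    if executions < 1:
        raise ValueError("executions must be at least 1")
    params = {
        "FunctionName": function_name,
        "Qualifier": qualifier,
        "ProvisionedConcurrentExecutions": executions,  # environments to keep initialized
    }
    if lambda_client is not None:
        return lambda_client.put_provisioned_concurrency_config(**params)
    return params


# With AWS credentials configured (hypothetical function and alias names):
#   import boto3
#   configure_provisioned_concurrency("my-function", "prod", 10, boto3.client("lambda"))
print(configure_provisioned_concurrency("my-function", "prod", 10))
```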

Let’s take a look at what cold and warm starts are. In the function lifecycle, a warm start and a cold start follow distinctly different paths. In this example, requests reach the Lambda service through Amazon API Gateway. The Lambda service first checks whether a pre-created execution environment is available. If one is, the function has been invoked recently and its environment is still ready, which is why this is called a warm start. Lambda then invokes the handler and completes the execution. So how is this different from a cold start?

In a cold start, no warm execution environment is available, so additional steps are needed. First, the service must find an available compute resource and download the function code. It then launches and prepares the execution environment and runs the initialization code (the code outside your handler). Finally, it invokes the handler and completes the invocation. These extra steps can take anywhere from under 100 ms to over 1 s, far longer than a warm start. This additional delay is called cold-start latency.

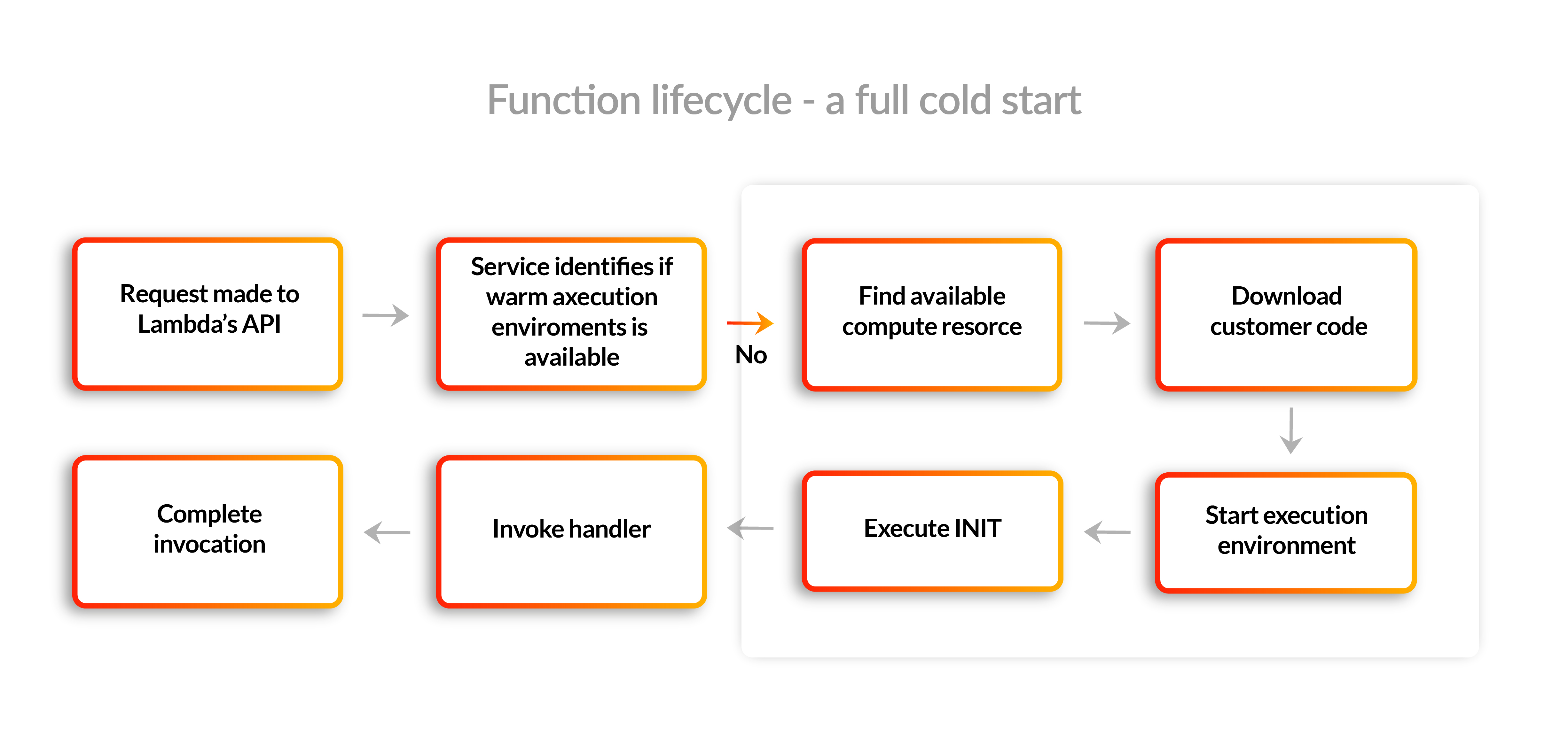
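The decision Lambda makes on each request can be illustrated with a toy model. This is a simplified sketch, not how the Lambda service is implemented: it assumes environments are never reclaimed and that simultaneous requests each need their own environment. The class `ToyLambdaService` and its `provisioned` parameter are hypothetical.

```python
from typing import List


class ToyLambdaService:
    """Toy model of warm vs. cold starts (not the real Lambda scheduler)."""

    def __init__(self, provisioned: int = 0) -> None:
        # Environments that already exist and have run their initialization code.
        # Provisioned Concurrency corresponds to creating these before any request arrives.
        self.environments = provisioned

    def invoke_concurrently(self, n: int) -> List[str]:
        """Handle n simultaneous requests and report how each one started."""
        warm = min(n, self.environments)  # reuse existing, initialized environments
        cold = n - warm                    # the rest need new environments
        # Cold path: find compute, download code, start the environment, run init code.
        # In this model the new environments then stay around for later reuse.
        self.environments += cold
        return ["warm"] * warm + ["cold"] * cold


service = ToyLambdaService()
print(service.invoke_concurrently(3))  # first burst: every request is a cold start
print(service.invoke_concurrently(2))  # environments now exist, so both start warm
```

Passing `provisioned=5` to the constructor models Provisioned Concurrency: the first five simultaneous requests start warm even on the very first burst.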

Provisioned Concurrency addresses two sources of cold-start latency. First, the execution environment is set up during provisioning instead of during invocation. Second, the initialization code runs once during provisioning, so subsequent invocations do not repeat it. Initialized environments are hyper-ready to respond in double-digit milliseconds.

If we go back to our function lifecycle flow, this is what happens when the execution environments are pre-created. First, the function is configured to use Provisioned Concurrency. Next, Lambda finds available compute resources for the requested capacity. It downloads the function code into each of those execution environments and prepares them. Most importantly, it runs the initialization code. Provisioned Concurrency therefore addresses both components of a cold start: creating the execution environment and running your initialization code. Because the environment is prepared before the function is invoked, the time your static initializers take no longer adds to invocation latency.

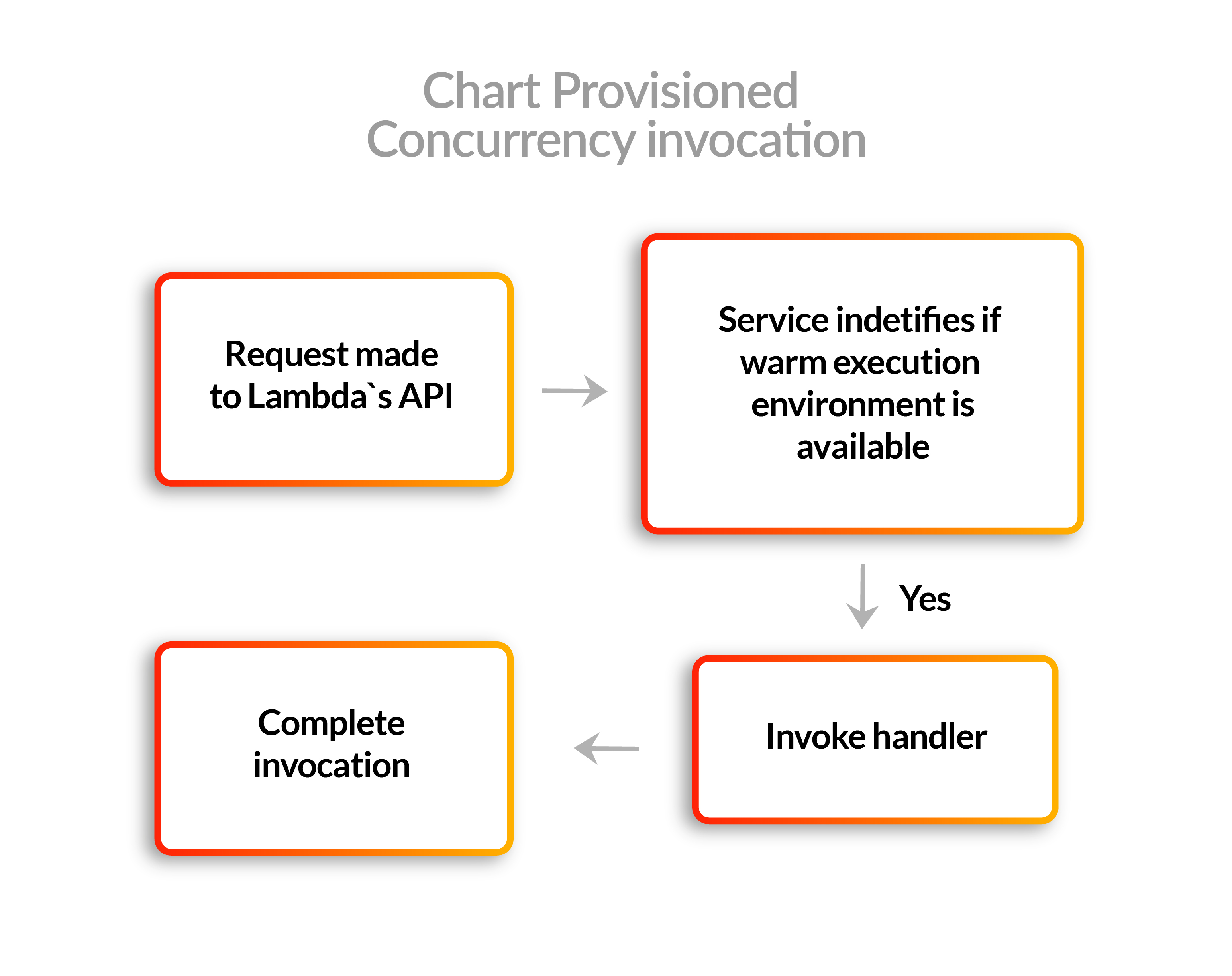
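In code, the split between initialization and handler looks like the Python sketch below. Everything at module level runs once per execution environment, which is exactly the work Provisioned Concurrency moves ahead of the first request; the handler body runs on every invocation. `_expensive_setup`, `SETTINGS`, and `INVOCATION_COUNT` are hypothetical stand-ins for real setup such as creating SDK clients or loading configuration.

```python
import time
from typing import Any, Dict

# --- Initialization code (outside the handler) ---
# Runs once when the execution environment loads this module. With Provisioned
# Concurrency, this happens during provisioning, before traffic arrives.


def _expensive_setup() -> Dict[str, str]:
    # Hypothetical stand-in for slow setup work (SDK clients, config, caches).
    time.sleep(0.1)
    return {"greeting": "hello"}


SETTINGS = _expensive_setup()
INVOCATION_COUNT = 0  # module state survives between invocations in the same environment


# --- Handler code ---
# Runs on every invocation and reuses SETTINGS without repeating the setup above.
def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    global INVOCATION_COUNT
    INVOCATION_COUNT += 1
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": f"{SETTINGS['greeting']}, {name}",
        "invocation": INVOCATION_COUNT,
    }
```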

Once the execution environments are prepared with Provisioned Concurrency, every invocation they serve follows the path below. You’ll recognize it as the warm-start flow from earlier: as long as requests stay within the provisioned capacity, every invocation is a warm start.

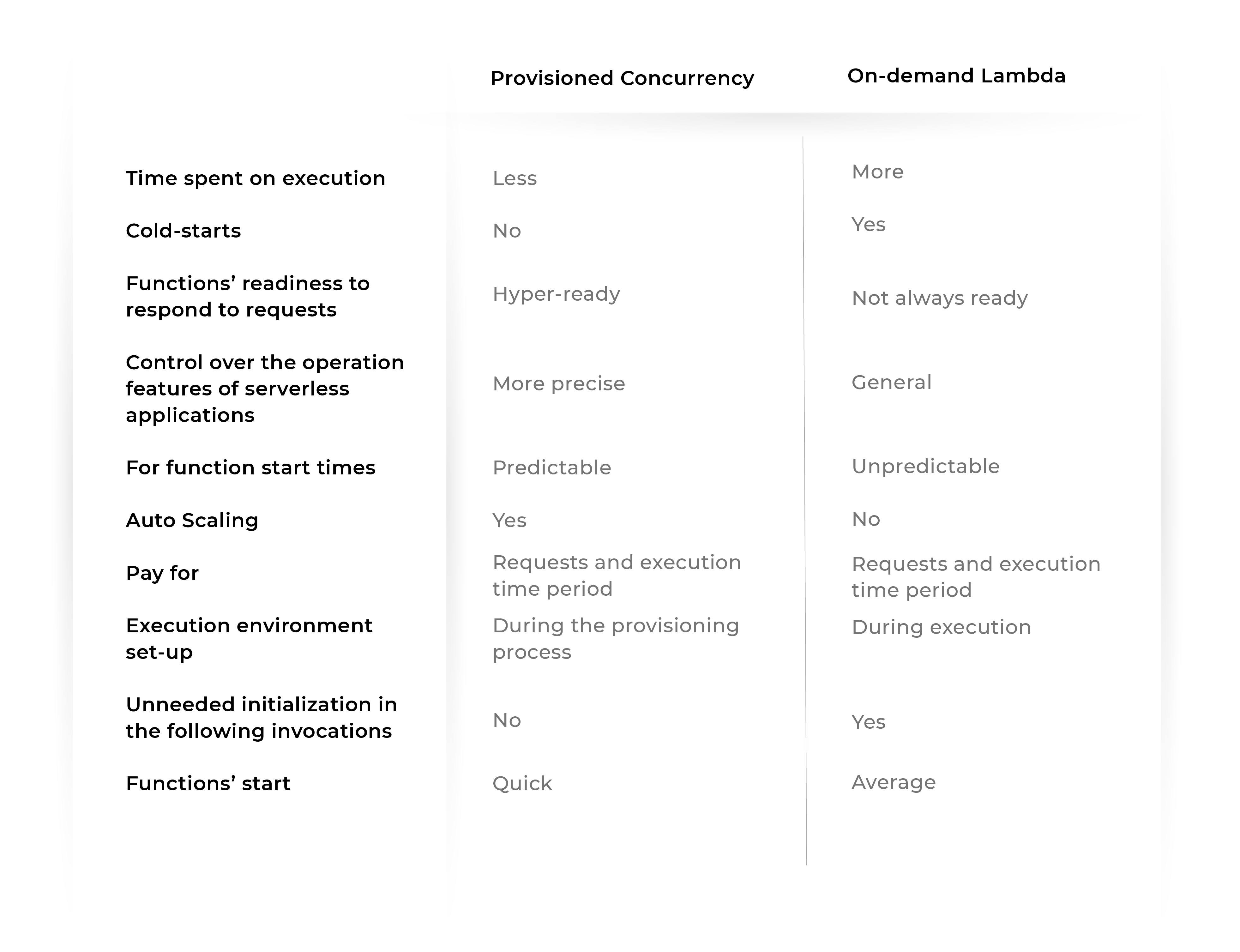

Sounds promising, doesn’t it? But what’s the difference between Provisioned Concurrency and On-demand Lambda, and why is the former considered the better solution?

Why Provisioned Concurrency is better than On-demand Lambda

In the table above, you can see the key differences between AWS Lambda Provisioned Concurrency and On-demand Lambda, and why the former performs better for latency-sensitive workloads. Despite these differences, the two can be combined. When you configure Provisioned Concurrency on a function, the Lambda service prepares that number of execution environments to run your code. If traffic exceeds that level, you can either let the extra invocations run on the on-demand model (the default, with possible cold starts) or reject them. To reject them, set a per-function concurrency limit.
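The interaction between the provisioned level, on-demand spillover, and a per-function concurrency limit can be summarized with a small model. It is a simplified sketch of the behavior described above, not Lambda's actual scheduler; the function `route_requests` is hypothetical, and the model assumes the Provisioned Concurrency setting does not exceed the per-function limit.

```python
from typing import Dict, Optional


def route_requests(
    concurrent: int, provisioned: int, function_limit: Optional[int] = None
) -> Dict[str, int]:
    """Split `concurrent` simultaneous requests by how each would be served.

    provisioned: environments kept initialized by Provisioned Concurrency.
    function_limit: optional per-function concurrency limit; None means no limit.
    """
    if function_limit is not None and function_limit < provisioned:
        raise ValueError("this model assumes provisioned <= function_limit")
    # Requests above the per-function limit (if any) are throttled.
    allowed = concurrent if function_limit is None else min(concurrent, function_limit)
    warm = min(allowed, provisioned)  # served by pre-initialized environments
    return {
        "provisioned": warm,
        "on_demand": allowed - warm,  # spillover; may hit cold starts
        "throttled": concurrent - allowed,
    }


print(route_requests(150, provisioned=100))                      # extra 50 run on demand
print(route_requests(150, provisioned=100, function_limit=100))  # extra 50 are throttled
```

Setting the limit equal to the provisioned level means every accepted request starts warm, at the cost of rejecting the excess; leaving it unset trades occasional cold starts for availability.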

Reserved Concurrency

Each AWS account has a concurrency quota in each Region, shared by all functions in that Region. Lambda always keeps 100 concurrent executions unreserved, so the amount you can allocate to reserved concurrency and Provisioned Concurrency is smaller than your full quota. Why? To make sure functions without their own allocation can still run: if Provisioned Concurrency could occupy every concurrent execution, regular functions would have nothing left. If you need more concurrency, you can request a quota increase through the AWS Support Center console. According to the AWS Lambda documentation, concurrent executions can be increased to hundreds of thousands.
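The arithmetic behind the 100-execution reservation is simple. The sketch below uses a hypothetical regional quota of 1,000 concurrent executions; your account's actual quota may differ, so check it before relying on these numbers. `max_allocatable_concurrency` is a hypothetical helper.

```python
UNRESERVED_MINIMUM = 100  # concurrency Lambda keeps unreserved in each Region


def max_allocatable_concurrency(account_quota: int, already_allocated: int = 0) -> int:
    """Largest additional reserved or Provisioned Concurrency you could still allocate.

    account_quota: the Region's concurrency quota for your account.
    already_allocated: concurrency already reserved or provisioned for other functions.
    """
    return max(0, account_quota - UNRESERVED_MINIMUM - already_allocated)


# Hypothetical quota of 1,000: 900 can be allocated, and 100 always stay unreserved.
print(max_allocatable_concurrency(1000))
print(max_allocatable_concurrency(1000, already_allocated=850))
```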

Burst Concurrency limits

Your functions’ concurrency is the number of instances serving requests at a given time. For an initial burst of traffic, the cumulative concurrency of your functions in a Region can reach an initial level of between 500 and 3,000, depending on the Region.

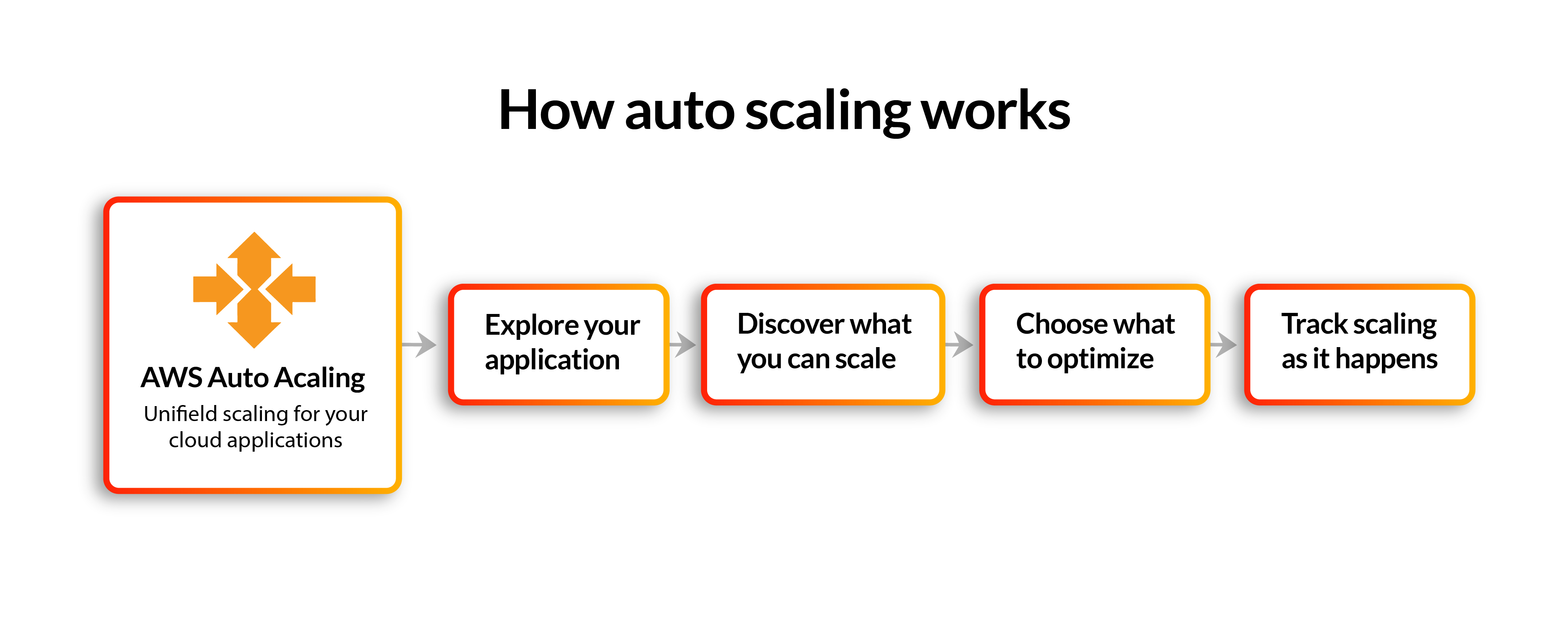
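The sketch below bounds how far concurrency can grow during a sudden burst. The initial level comes from the text above (500 to 3,000 depending on the Region). The growth of 500 additional instances per minute after that is an assumption based on how AWS documented burst scaling when this article was written; AWS has since revised its scaling behavior, so check the current Lambda documentation. `burst_capacity` is a hypothetical helper.

```python
def burst_capacity(
    minutes_elapsed: int, initial_burst: int, account_limit: int, per_minute: int = 500
) -> int:
    """Upper bound on concurrency `minutes_elapsed` minutes into a traffic burst.

    initial_burst: Region-specific initial level (500 to 3,000).
    account_limit: the account's regional concurrency quota, which caps everything.
    per_minute: assumed extra instances added each minute after the initial burst.
    """
    if minutes_elapsed < 0:
        raise ValueError("minutes_elapsed must be non-negative")
    return min(account_limit, initial_burst + per_minute * minutes_elapsed)


# Region with a 500 initial burst and a hypothetical 5,000 account quota.
for minute in (0, 1, 5, 10):
    print(minute, burst_capacity(minute, initial_burst=500, account_limit=5000))
```

Under this model, requests beyond the bound are throttled, which is why having environments provisioned ahead of a predictable spike helps.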

Auto Scaling

AWS Auto Scaling monitors your applications and automatically adjusts capacity to maintain steady, predictable performance at the lowest possible cost. It builds scaling policies and sets targets for you based on your preferences: you can optimize for cost, availability, or a balance of both (see Optimization). With AWS Auto Scaling, your applications always have the right resources at the right time. To learn more about how Auto Scaling works, including scheduled and workload-based Auto Scaling, read our article on this topic.
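For Lambda Provisioned Concurrency specifically, scaling is configured through Application Auto Scaling, using the scalable dimension `lambda:function:ProvisionedConcurrency` and the predefined `LambdaProvisionedConcurrencyUtilization` metric for target tracking. The sketch below builds the two boto3 request payloads; the helper name, function name, alias, capacity bounds, policy name, and the 0.7 (70%) utilization target are hypothetical example values.

```python
from typing import Any, Dict, Tuple


def provisioned_concurrency_scaling_requests(
    function_name: str,
    alias: str,
    min_capacity: int,
    max_capacity: int,
    target_utilization: float = 0.7,
) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return keyword arguments for register_scalable_target and put_scaling_policy."""
    if not 1 <= min_capacity <= max_capacity:
        raise ValueError("need 1 <= min_capacity <= max_capacity")
    resource_id = f"function:{function_name}:{alias}"
    dimension = "lambda:function:ProvisionedConcurrency"
    scalable_target = {
        "ServiceNamespace": "lambda",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }
    # Target tracking adds or removes provisioned environments to keep
    # utilization of the provisioned capacity near TargetValue.
    scaling_policy = {
        "PolicyName": f"{function_name}-{alias}-pc-utilization",
        "ServiceNamespace": "lambda",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization"
            },
        },
    }
    return scalable_target, scaling_policy


target, policy = provisioned_concurrency_scaling_requests("my-function", "prod", 1, 100)
print(target)

# With AWS credentials configured:
#   import boto3
#   client = boto3.client("application-autoscaling")
#   client.register_scalable_target(**target)
#   client.put_scaling_policy(**policy)
```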

Amazon RDS Proxy

Many customers have petabytes of data in relational databases and may use Lambda functions to access that data. One problem is that relational databases are connection-based and are typically designed for a fixed pool of long-running application servers. When Lambda scales out, each new execution environment can open its own connection, which may overwhelm the database with connection requests. Each connection also consumes memory on the database server, which can hurt performance too. Because Lambda execution environments are short-lived, this connection model isn't a good fit. Amazon launched RDS Proxy to solve this problem. You simply point your Lambda function at the proxy instead of the database, and the proxy pools and manages the database connections for you. Some of the features bundled with the service can also make your applications more resilient to database failures and help improve your security posture. RDS Proxy is easy to implement, and it helps reconcile the way scalable compute works with traditional database architectures.
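RDS Proxy is a managed service, so you don't write the pooling yourself, but the core idea is easy to sketch: many callers share a small, bounded set of connections instead of each opening its own. The toy pool below illustrates that idea only; it is not RDS Proxy's implementation, and `ConnectionPool` and its `connect` callback are hypothetical.

```python
import queue
import threading
from typing import Any, Callable


class ConnectionPool:
    """Toy pool: any number of callers share at most `size` connections."""

    def __init__(self, size: int, connect: Callable[[], Any]) -> None:
        self._size = size
        self._connect = connect  # opens one new database connection
        self._idle: "queue.Queue[Any]" = queue.Queue()
        self._lock = threading.Lock()
        self.opened = 0  # connections ever opened against the database

    def acquire(self) -> Any:
        with self._lock:
            # Open a new connection only if none is idle and we are under the cap.
            if self._idle.empty() and self.opened < self._size:
                self.opened += 1
                return self._connect()
        # Otherwise wait for another caller to release one.
        return self._idle.get()

    def release(self, conn: Any) -> None:
        self._idle.put(conn)


# 50 concurrent "function invocations" share at most 5 database connections.
pool = ConnectionPool(size=5, connect=object)


def invocation() -> None:
    conn = pool.acquire()
    try:
        pass  # run a query with `conn` here
    finally:
        pool.release(conn)


threads = [threading.Thread(target=invocation) for _ in range(50)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print("connections opened:", pool.opened)
```

With RDS Proxy, this pooling happens inside the proxy, so the function code only changes the endpoint it connects to.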

Optimization

If you would like to observe the performance of your function more closely, enable AWS X-Ray tracing for that function.

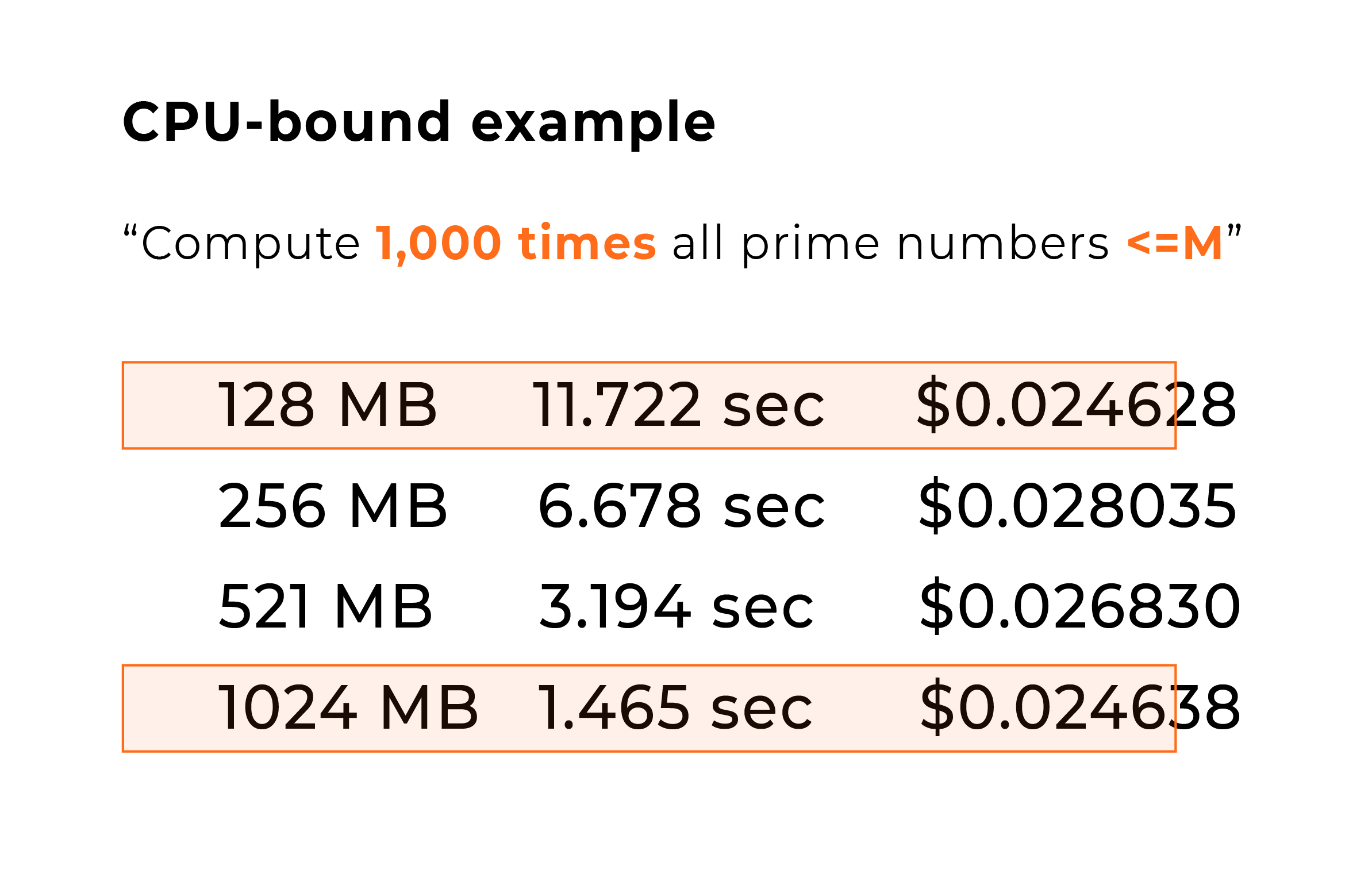

One lever you have in a Lambda function is memory. Memory is the only resource setting Lambda exposes, and you can choose between 128 MB and 3,008 MB for your function (the limit at the time of writing; it has since been raised to 10,240 MB). Increasing memory proportionally increases the CPU power and network bandwidth allocated to the function. Many developers leave memory at the default of 128 MB, which is fine for very simple functions. But if your code is CPU-, network-, or memory-bound, changing the memory setting can dramatically improve performance. In fact, it may even be cheaper! Let’s look at the example below.

Here is an example of a CPU-bound function that computes all prime numbers up to 1 million. With 128 MB allocated, it takes 11.722 seconds. Increasing memory to 256 MB brings that down to 6.678 seconds, and to 3.194 seconds at 512 MB. Finally, at 1,024 MB it takes only 1.465 seconds. It’s probably no surprise that it gets faster, but what might surprise you is that the cost stays about the same, within a thousandth of a cent. Why is that?
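The article doesn't include the function's source, so the sketch below is a hypothetical stand-in for a CPU-bound workload: a Python handler that counts primes up to `n` by trial division. It is deliberately inefficient so that its run time depends on the CPU share, which scales with the memory setting; the `n` event field and its default are assumptions.

```python
import math
import time
from typing import Any, Dict


def count_primes_up_to(n: int) -> int:
    """Count primes <= n by trial division (deliberately CPU-heavy)."""
    count = 0
    for candidate in range(2, n + 1):
        limit = math.isqrt(candidate)
        # candidate is prime if no divisor in [2, sqrt(candidate)] divides it evenly
        if all(candidate % d for d in range(2, limit + 1)):
            count += 1
    return count


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    n = int(event.get("n", 1_000_000))
    started = time.perf_counter()
    primes = count_primes_up_to(n)
    return {"n": n, "primes": primes, "seconds": round(time.perf_counter() - started, 3)}


print(handler({"n": 10_000}, None))
```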

Lambda bills by gigabyte-seconds (allocated memory multiplied by execution time), so more memory can be cheaper if it cuts execution time substantially, or at least cost about the same, as in this example. You can test your Lambda functions at different memory settings to find the lowest cost. Running those tests by hand for every function is very time-consuming, but fortunately there is an automated way to do it.
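Plugging the measurements above into the gigabyte-second formula shows why the costs line up. The sketch assumes an on-demand price of $0.0000166667 per GB-second (the long-standing x86 rate; check current pricing for your Region and architecture), ignores the per-request charge because it is identical across memory settings, and bills exact durations without rounding. `invocation_cost` is a hypothetical helper.

```python
PRICE_PER_GB_SECOND = 0.0000166667  # assumed on-demand x86 price; verify for your Region


def invocation_cost(memory_mb: int, duration_s: float, price: float = PRICE_PER_GB_SECOND) -> float:
    """Compute cost of one invocation: GB-seconds multiplied by price per GB-second."""
    gb_seconds = (memory_mb / 1024) * duration_s
    return gb_seconds * price


# Memory (MB) -> measured duration (s) from the prime-number example above.
measurements = {128: 11.722, 256: 6.678, 512: 3.194, 1024: 1.465}

for memory_mb, duration_s in measurements.items():
    cost = invocation_cost(memory_mb, duration_s)
    print(f"{memory_mb:>5} MB  {duration_s:>6.3f} s  ${cost:.8f}")
```

The spread between the cheapest and most expensive setting comes to well under a thousandth of a cent per invocation, while the 1,024 MB setting runs about eight times faster than 128 MB.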

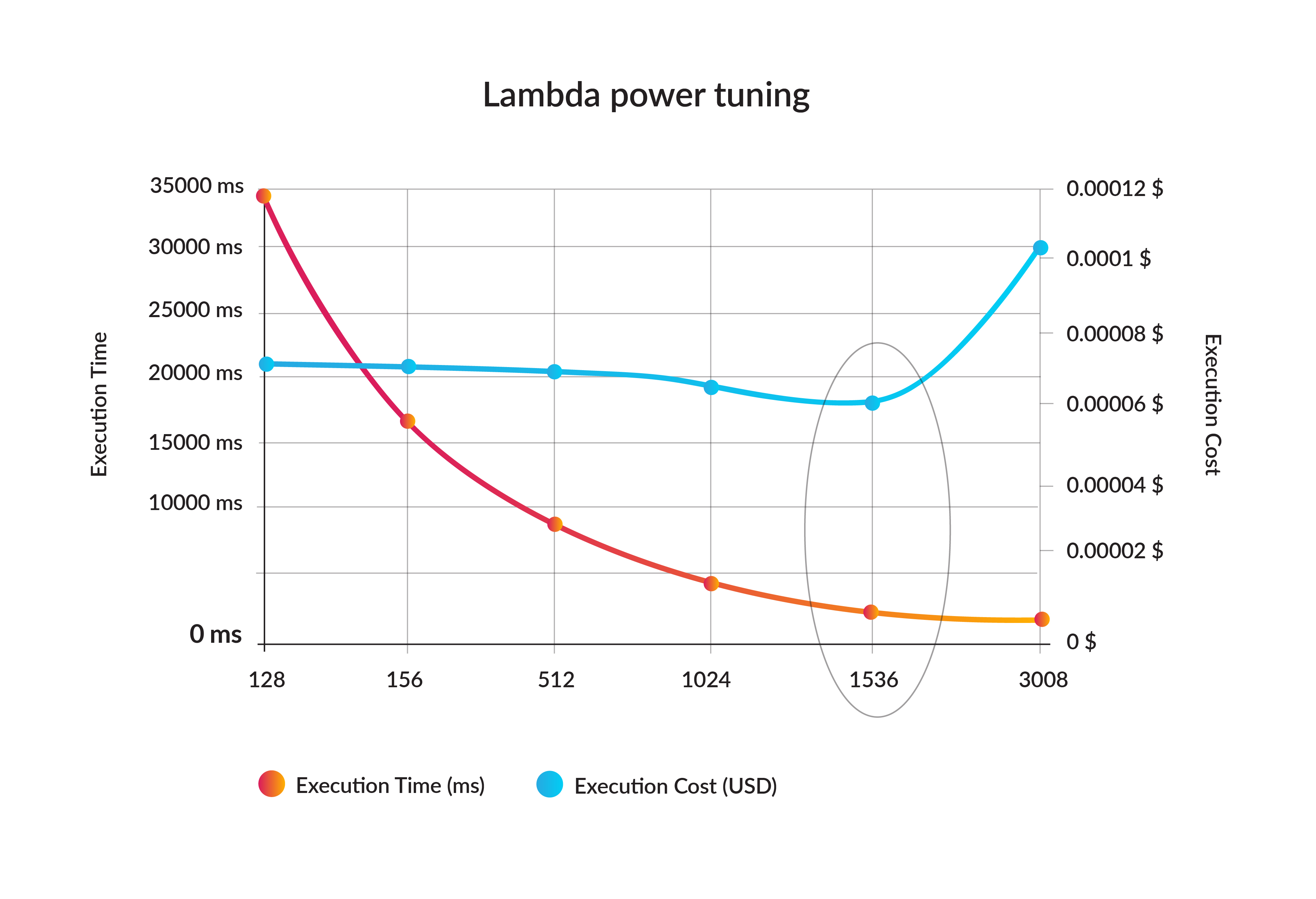

AWS Lambda Power Tuning is an open-source tool that helps you visualize and fine-tune the memory (and therefore power) configuration of Lambda functions. It was created as a simple, automated way to find the right balance of memory, speed, and cost. It uses an AWS Step Functions state machine to run multiple concurrent versions of your function at different memory allocations and measure how each performs. It then suggests the best option to either maximize performance or minimize cost. The function under test runs in your own AWS account, so it performs real HTTP calls and SDK calls and experiences real cold starts. The state machine also supports cross-Region invocations, and you can enable parallel execution to get results in just a few seconds. You can also build the analysis into a CI/CD process so it runs automatically as you build new functions.

Let’s take a look at an example of optimizing functions with AWS Lambda Power Tuning. The chart shows the results for two CPU-intensive functions that become both faster AND cheaper as more power is added. For example, execution time drops from 35 s at 128 MB to less than 3 s at 1.5 GB, while costing 14% less to run.
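The selection step can be sketched as follows: given the average duration measured at each memory setting, pick the setting that minimizes cost, minimizes duration, or balances the two. Power Tuning offers similarly named strategies (`cost`, `speed`, `balanced`), but this is a simplified illustration rather than the tool's code, and the balanced scoring here (a weighted sum of cost and duration, each normalized to its maximum) is an assumption. `pick_memory` is a hypothetical helper, and the durations reuse the prime-number measurements from earlier.

```python
from typing import Dict


def pick_memory(durations: Dict[int, float], strategy: str = "cost", weight: float = 0.5) -> int:
    """Return the memory size (MB) that best fits the chosen strategy.

    durations: memory size in MB -> average measured duration in seconds.
    weight: for "balanced", how much cost matters relative to speed (0..1).
    """
    # GB-seconds are proportional to price, so they stand in for cost.
    cost = {mem: (mem / 1024) * dur for mem, dur in durations.items()}
    if strategy == "cost":
        return min(durations, key=lambda mem: cost[mem])
    if strategy == "speed":
        return min(durations, key=lambda mem: durations[mem])
    if strategy == "balanced":
        max_cost = max(cost.values())
        max_duration = max(durations.values())
        return min(
            durations,
            key=lambda mem: weight * cost[mem] / max_cost
            + (1 - weight) * durations[mem] / max_duration,
        )
    raise ValueError(f"unknown strategy: {strategy!r}")


measurements = {128: 11.722, 256: 6.678, 512: 3.194, 1024: 1.465}
for strategy in ("cost", "speed", "balanced"):
    print(strategy, pick_memory(measurements, strategy))
```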

Provisioned Concurrency and you

As you’ve read, Provisioned Concurrency is a new Lambda feature that helps eliminate cold starts for your functions. We can help you build applications on serverless architectures, power-tune your Lambda functions, optimize for speed or cost, and more. Contact us for more information, or if you’re ready to start making better choices for your applications with Provisioned Concurrency.